SC22 It’s safe to say liquid cooling was a hot topic at the Supercomputing conference in Dallas this week.

As far as the eye could see, the exhibition hall was packed with liquid-cooled servers, oil-filled immersion cooling tanks, and all the fittings, pumps, and coolant distribution units (CDUs) you might need to deploy the tech in a datacenter.

Given that this is a conference all about high-performance computing, the emphasis on thermal management shouldn’t really come as a surprise. But with 400W CPUs and 700W GPUs now in the wild, it’s hardly an HPC- or AI-exclusive problem. As more enterprises look to add AI/ML-capable systems to their datacenters, 3kW, 5kW, or even 10kW systems aren’t that crazy anymore.

So here’s a breakdown of the liquid-cooling kit that caught our eye at this year’s show.

Direct liquid cooling

The vast majority of the liquid-cooling systems being shown off at SC22 are of the direct-liquid variety. These swap copper or aluminum heat sinks and fans for cold plates, rubber tubing, and fittings.

If we’re being honest, these cold plates all look more or less the same. They’re essentially just a hollowed-out block of metal with an inlet and outlet for fluid to pass through. Note, we’re using the word “fluid” here because liquid-cooled systems can use any number of coolants that aren’t necessarily water.

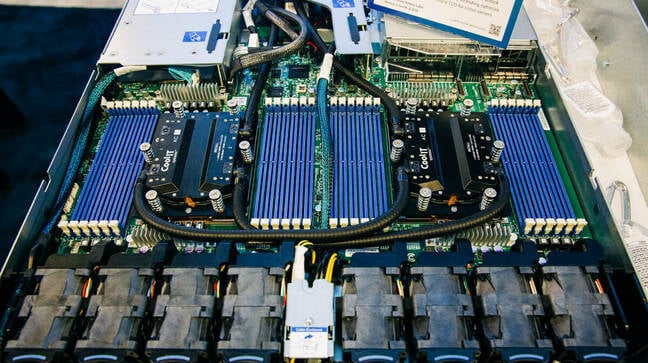

In many cases, OEMs are sourcing their cold plates from the same vendors. For instance, CoolIT provides liquid-cooling hardware for several OEMs, including HPE and Supermicro.

However, that’s not to say there isn’t an opportunity for differentiation. The insides of these cold plates are filled with micro-fin arrays that can be tweaked to optimize the flow of fluid through them. Depending on how large the dies are, or how many of them there are to cool, the internals of these cold plates can vary quite a bit.

Most of the liquid-cooled systems we saw on the show floor were using some kind of rubber tubing to connect the cold plates. This means that liquid is only cooling specific components like the CPU and GPU. So while the bulk of the fans can be removed, some airflow is still required.

HPE demonstrates its latest liquid-cooled Cray EX blades using AMD’s 96-core Epyc 4 CPUs.

Lenovo’s Neptune and HPE Cray’s EX blades were the exception to this rule. Their systems are purpose-built for liquid cooling and are packed to the gills with copper tubing, distribution blocks, and cold plates for everything, including CPUs, GPUs, memory, and NICs.

Using this approach, HPE has managed to cram eight of AMD’s 400W Epyc 4 Genoa CPUs into a 19-inch chassis.

A liquid-cooled Lenovo Neptune server configured with dual AMD Genoa CPUs and four Nvidia H100 GPUs.

Meanwhile, Lenovo showed off a 1U Neptune system designed to cool a pair of 96-core Epycs and four of Nvidia’s H100 SXM GPUs. Depending on the implementation, manufacturers claim their direct-liquid-cooled systems can remove anywhere from 80 percent to 97 percent of the heat generated by the server.
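
To put those capture figures in perspective, here is a rough back-of-the-envelope sketch of how much heat is left over for fans to deal with. It assumes all of a server's electrical power ends up as heat, and it uses a 10kW system purely as an illustration; the function name is our own and not anything a vendor ships.

```python
def residual_air_heat_w(server_power_w: float, liquid_capture_fraction: float) -> float:
    """Heat (in watts) still left for air cooling after the liquid loop takes its share.

    Assumption: every watt of electrical power drawn becomes heat.
    """
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("capture fraction must be between 0 and 1")
    return server_power_w * (1.0 - liquid_capture_fraction)


# Illustrative 10kW server at the low and high ends of the claimed capture range.
for fraction in (0.80, 0.97):
    leftover = residual_air_heat_w(10_000, fraction)
    print(f"{fraction:.0%} capture: {leftover:.0f} W left for air")
```

At the low end of the claimed range, a 10kW box would still shed roughly 2kW into the air; at the high end, about 300W.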

Immersion cooling

One of the more exotic liquid-cooling technologies on display at SC22 was immersion cooling, which has come back into vogue over the past few years. These systems can capture 100 percent of the heat generated by the system.

Rather than retrofit servers with cold plates, immersion-cooling tanks, like this one from Submer, dunk them in non-conductive fluid.

As crazy as it might sound, we’ve been submerging computer components in non-conductive fluids to keep them cool for decades. One of the most famous systems to use immersion cooling was the Cray-2 supercomputer.

While the fluids used in these systems vary from vendor to vendor, synthetic oils from the likes of Exxon or Castrol or specialized refrigerants from 3M aren’t uncommon.

Submer was one of several immersion-cooling companies showing off its tech at SC22 this week. The company’s SmartPods look a little like if you filled a chest freezer full of oil and started vertically slotting in servers from the top.

Submer offers tanks in multiple sizes that are roughly equivalent to traditional half and full-size racks. These tanks are rated for 50-100kW of thermal dissipation, putting them on par with rack-mounted air and liquid-cooling infrastructure in terms of power density.

Submer’s tanks support OCP OpenRack form factors like these three-blade Intel Xeon systems.

The demo tank had three 21-inch servers, each with three dual-socket Intel Sapphire Rapids blades, as well as a standard 2U AMD system that had been converted for use in its tanks.

However, we’re told the number of modifications required, especially on OCP chassis, is pretty negligible, with the only real changes being to swap out any moving parts from things like power supplies.

As you might expect, immersion cooling does complicate maintenance and is a fair bit messier than air or direct liquid cooling.

Not every immersion-cooling setup on the show floor requires gallons of specialized fluids. Iceotope’s in-chassis immersion-cooling system was one example. The company’s sealed server chassis functions as a reservoir, with the motherboard submerged in a few millimeters of fluid.

A redundant pump at the back of the server recirculates oil to hotspots like the CPU, GPUs, and memory before passing the hot fluid through a heat exchanger. There, the heat is transferred to a facility water system or a rack-scale CDU.

Supporting infrastructure

Regardless of whether you’re using direct-to-chip or immersion cooling, both systems require additional infrastructure to extract and dissipate the heat. For direct-liquid-cooled setups, this may include distribution manifolds, rack-level plumbing, and, most importantly, one or more CDUs.

Large rack-sized CDUs might be used to cool an entire row of server cabinets. For example, Cooltera showed off several large CDUs capable of providing up to 600kW of cooling to a datacenter. Or, for smaller deployments, a rack-mounted CDU might be used. We looked at two examples from Supermicro and Cooltera, which provided between 80 and 100kW of cooling capability.
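
For a rough sense of scale, the sketch below estimates how many servers a single CDU of a given rating could support. It is only a first-pass sizing exercise under our own assumptions: the function name is hypothetical, the 10kW server is illustrative, and real deployments also have to account for redundancy, flow rates, and coolant temperatures, none of which are modeled here.

```python
import math


def servers_per_cdu(cdu_capacity_kw: float, server_power_kw: float,
                    liquid_capture_fraction: float = 1.0) -> int:
    """Whole number of servers whose liquid-side heat load fits within one CDU.

    Assumption: only the heat captured by the liquid loop counts against
    the CDU's rated cooling capacity.
    """
    if cdu_capacity_kw < 0 or server_power_kw <= 0:
        raise ValueError("capacity must be non-negative and server power positive")
    if not 0.0 < liquid_capture_fraction <= 1.0:
        raise ValueError("capture fraction must be in (0, 1]")
    liquid_load_kw = server_power_kw * liquid_capture_fraction
    return math.floor(cdu_capacity_kw / liquid_load_kw)


# Illustrative 10kW servers against the CDU ratings mentioned above.
print(servers_per_cdu(80, 10))   # rack-mounted CDU, low end of the quoted range
print(servers_per_cdu(600, 10))  # large row-level CDU
```

On those assumptions, an 80kW rack-mounted unit covers eight 10kW servers, while a 600kW unit covers 60.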

These CDUs are made up of three main components: a heat exchanger, redundant pumps for circulating coolant throughout the racks, and a filtration system to keep particulates from clogging up critical components like cold plate micro fins.

How the heat is actually extracted from the coolant system depends heavily on the kind of heat exchanger being used. Liquid-to-air heat exchangers are among the simplest because they require the fewest modifications to the facility itself. The Cooltera CDU pictured here uses large radiators to dump heat captured by the fluid into the datacenter’s hot aisle.

In addition to pumps and filtration, this Cooltera CDU features an integrated liquid-to-air heat exchanger.

However, the majority of CDUs we saw at SC22 used liquid-to-liquid heat exchangers. The idea here is to use a separate facility-wide water system to move heat collected by multiple CDUs to dry-coolers at the exterior of the building where it’s dissipated to air. Or, instead of dumping the heat into the atmosphere, some datacenters, like Microsoft’s latest facility in Helsinki, have connected their facility water systems to district heating systems.

The situation is largely the same for immersion cooling, though many of the components of the CDU, like the pumps, liquid-to-liquid heat exchangers, and filtration systems, are built into the tanks. All that’s really required is for them to be hooked up to the facility water system.

Liquid-cooling adoption on the rise

While liquid cooling accounts for only a fraction of spending on datacenter thermal management today, hotter components and higher rack-power densities are beginning to drive adoption of the tech.

According to a recent Dell’Oro Group report, spending on liquid and immersion-cooling equipment is expected to reach $1.1 billion or 19 percent of thermal management spending by 2026.

Meanwhile, soaring energy prices and an increased emphasis on sustainability are making liquid cooling appealing on other levels. Setting aside the practicality of cooling a 3kW server with air, 30-40 percent of a datacenter’s energy consumption can be attributed to the air conditioning and air handling equipment required to keep systems at operating temperature.
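
To see what that share means per unit of useful work, here is a small sketch that converts a cooling share of total facility energy into the cooling overhead for every watt that goes elsewhere. It makes the simplifying assumption that everything not spent on cooling goes to IT equipment, which ignores other losses such as power distribution; the function name is ours.

```python
def cooling_overhead_per_it_watt(cooling_share: float) -> float:
    """Watts spent on cooling for each watt delivered to IT equipment.

    Assumption: facility energy splits into cooling and IT load only.
    """
    if not 0.0 <= cooling_share < 1.0:
        raise ValueError("cooling share must be in [0, 1)")
    return cooling_share / (1.0 - cooling_share)


# The 30-40 percent range quoted above.
for share in (0.30, 0.40):
    print(f"{share:.0%} of facility energy on cooling -> "
          f"{cooling_overhead_per_it_watt(share):.2f} W of cooling per IT watt")
```

Under that simplification, the quoted range works out to somewhere between about 0.43W and 0.67W of cooling for every watt of compute.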

So while server vendors have found ways to air cool servers of up to 10kW, as in the case of Nvidia’s DGX H100, at these power and thermal densities there are external incentives to reduce power consumption not used for computing. ®